Analysis of Logging Data Quality: 5 Major Influencing Factors and Optimization Strategies

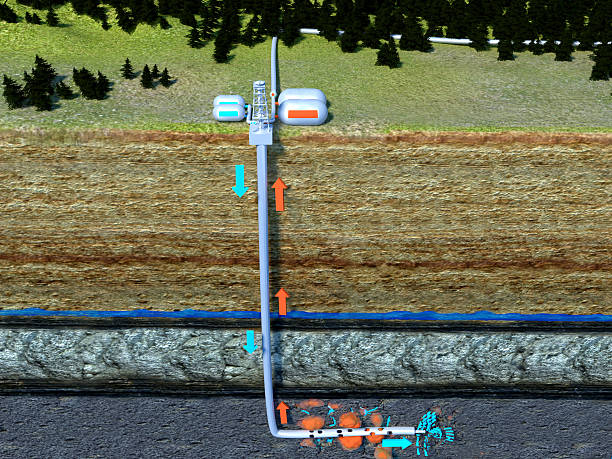

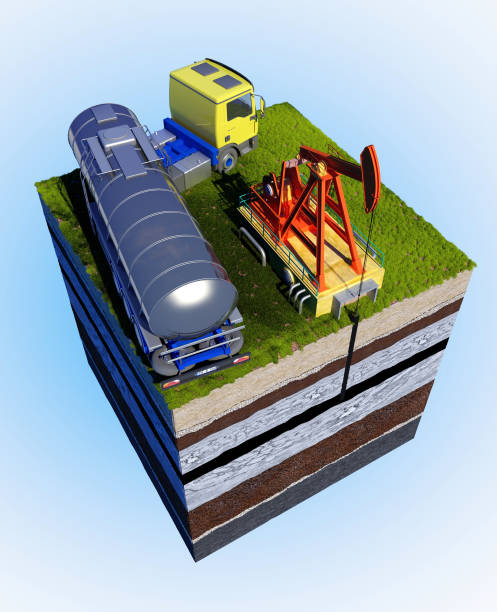

Well logging is an indispensable technology in oil and gas exploration and production. The data obtained from downhole measurements help engineers evaluate formation properties, estimate oil and gas reserves, plan well trajectories, and optimize development strategies. The quality of logging data therefore directly affects the accuracy of these decisions, and with it the effectiveness of resource development and its economic return. For this reason, understanding the factors that affect logging data quality, and taking effective measures to improve it, is vital for oil and gas exploration and development.

The Definition and Significance of Logging Data Quality

Logging data quality refers to the accuracy, reliability, and repeatability of the downhole data acquired by logging. In oil and gas production, high-quality logging data help determine key formation properties such as lithology, porosity, and permeability, which in turn support a sound assessment of reserves and effective planning of production operations. Poor data quality can lead to incorrect development decisions, higher investment costs, and even compromised operational safety.
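Of these properties, repeatability is the most direct to check: a short interval is logged twice (a repeat section) and compared with the main pass. The sketch below is a minimal illustration that assumes both passes are already depth-matched and sampled at the same depths; the function name, the mean-absolute-difference metric, and the tolerance value are hypothetical choices, not taken from any standard.

```python
from statistics import mean


def repeatability_check(main_pass, repeat_pass, tolerance):
    """Compare a repeat section with the main pass over the same depths.

    Assumes both lists are depth-matched and sampled at identical depths.
    `tolerance` is a hypothetical acceptance limit in the curve's units.
    Returns (mean absolute difference, whether it is within tolerance).
    """
    if len(main_pass) != len(repeat_pass) or not main_pass:
        raise ValueError("passes must be non-empty and the same length")
    diffs = [abs(a - b) for a, b in zip(main_pass, repeat_pass)]
    mad = mean(diffs)
    return mad, mad <= tolerance


# Illustrative gamma-ray readings (API units); the values are made up.
main = [45.0, 52.0, 88.0, 91.0, 60.0]
repeat = [46.0, 51.0, 90.0, 90.0, 61.0]
mad, ok = repeatability_check(main, repeat, tolerance=2.0)
print(f"mean abs difference = {mad:.2f}, within tolerance: {ok}")
```

A single average can hide a localized mismatch, so in practice the difference curve itself is also worth inspecting.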

In addition, running simulation tests and training sessions on the Well Logging Simulator before actual logging jobs can significantly improve logging personnel's understanding of how tools respond in different formations and geological conditions. This helps reduce errors during field logging and thereby protects data quality.

The Main Factors Affecting the Quality of Logging Data

1. Instrument accuracy and calibration

Lack of calibration and maintenance: If instruments are not regularly calibrated against reference standards, they behave like an uncalibrated balance and introduce systematic errors.

Sensor aging and drift: Prolonged operation in high-temperature, high-pressure environments degrades sensor performance, reducing response sensitivity and causing the data to drift.

Improper tool combination: Tools based on different measurement principles (such as resistivity, acoustic, and nuclear magnetic resonance) may be poorly matched in the tool string or interfere with one another electromagnetically, which undermines data consistency.

Temperature and pressure ratings: In ultra-deep or high-temperature, high-pressure wells, a tool operated beyond its rated limits may produce severely distorted data or fail completely.
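The link between calibration and systematic error can be illustrated with a simple two-point (gain and offset) correction, together with a before/after check for drift. This is a minimal sketch that assumes the tool response is linear between two reference standards; real tools follow vendor-specific calibration procedures, and the class name, function names, and numbers below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TwoPointCalibration:
    """Hypothetical linear calibration: true = gain * raw + offset."""
    gain: float
    offset: float

    @classmethod
    def from_references(cls, raw_low, raw_high, true_low, true_high):
        """Derive gain and offset from readings on two reference standards."""
        if raw_high == raw_low:
            raise ValueError("reference readings must differ")
        gain = (true_high - true_low) / (raw_high - raw_low)
        offset = true_low - gain * raw_low
        return cls(gain, offset)

    def apply(self, raw_values):
        """Convert raw tool readings into calibrated values."""
        return [self.gain * r + self.offset for r in raw_values]


def drift_exceeds(check_before, check_after, tolerance):
    """Flag drift when the same check standard reads differently before
    and after the survey by more than `tolerance` (hypothetical limit)."""
    return abs(check_after - check_before) > tolerance


cal = TwoPointCalibration.from_references(raw_low=10.0, raw_high=110.0,
                                          true_low=0.0, true_high=200.0)
print(cal.apply([10.0, 60.0, 110.0]))    # [0.0, 100.0, 200.0]
print(drift_exceeds(100.0, 103.0, 2.0))  # True
```

An uncalibrated tool corresponds to using gain 1 and offset 0 regardless of the true response, which is exactly the "inaccurate balance" kind of systematic error.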

2. Downhole environmental conditions

Drilling fluid (mud) invasion: This is the most common influencing factor. Mud filtrate invades the formation and alters the resistivity, hydrogen content, and other properties of the original formation, distorting curves such as resistivity and neutron porosity.

Irregular wellbore geometry: Borehole wall collapse (washout) and hole shrinkage leave the tool off-center and with poor contact against the borehole wall, which affects the accuracy of almost all logging measurements.
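A common way to screen for these borehole-geometry problems is to compare the caliper curve (measured hole diameter) with the bit size and flag intervals where the hole is enlarged (washout) or undergauge (tight). This sketch assumes caliper and bit size are both in inches; the function name and both tolerances are illustrative assumptions that should be replaced by local QC rules.

```python
def flag_bad_hole(depths, caliper, bit_size, washout_tol=1.0, tight_tol=0.25):
    """Label depths where the caliper departs from the bit size.

    Units are inches. `washout_tol` and `tight_tol` are hypothetical
    thresholds, not values from any standard.
    Returns a list of (depth, label) pairs for flagged samples only.
    """
    flags = []
    for depth, cal in zip(depths, caliper):
        excess = cal - bit_size
        if excess > washout_tol:
            flags.append((depth, "washout"))   # hole enlarged beyond bit size
        elif -excess > tight_tol:
            flags.append((depth, "tight"))     # hole narrower than bit size
    return flags


depths = [1000.0, 1000.5, 1001.0, 1001.5]
caliper = [8.6, 9.8, 8.5, 8.1]
print(flag_bad_hole(depths, caliper, bit_size=8.5))
# [(1000.5, 'washout'), (1001.5, 'tight')]
```

Flagged intervals are candidates for borehole corrections or for lower confidence during interpretation; pad-type measurements are usually the most sensitive.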

Extreme temperatures and pressures: High temperatures increase the noise of electronic components, and high pressures can affect the mechanical structure of sensors. Together, the two accelerate the decline in instrument performance.

Complex formation properties: For instance, highly radioactive minerals raise natural gamma readings, and highly conductive minerals such as pyrite cause abnormally low measured resistivity.

3. Operational techniques and experience

Improper logging speed control: Logging too fast reduces the number of samples per unit depth, which particularly affects high-resolution tools (such as acoustic and formation micro-resistivity imaging tools) and may cause thin-bed information to be lost.
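The relationship between logging speed and sampling density is simple arithmetic: sample spacing equals speed divided by sampling rate. The sketch below works through it for a hypothetical tool recording 4 samples per second and a 0.2 m thin bed; the function names and numbers are illustrative assumptions, and the calculation ignores the tool's intrinsic vertical resolution, which also limits thin-bed detection.

```python
def sample_spacing_m(speed_m_per_h, samples_per_s):
    """Depth distance (m) between consecutive samples at a given logging speed."""
    return (speed_m_per_h / 3600.0) / samples_per_s


def samples_across_bed(bed_thickness_m, speed_m_per_h, samples_per_s):
    """How many samples fall within a bed of the given thickness."""
    return bed_thickness_m / sample_spacing_m(speed_m_per_h, samples_per_s)


def max_speed_m_per_h(target_spacing_m, samples_per_s):
    """Fastest logging speed that still achieves the target sample spacing."""
    return target_spacing_m * samples_per_s * 3600.0


# Hypothetical tool recording 4 samples per second; 0.2 m thin bed.
for speed in (900.0, 1800.0, 3600.0):
    n = samples_across_bed(0.2, speed, 4.0)
    print(f"{speed:6.0f} m/h: spacing {sample_spacing_m(speed, 4.0):.3f} m, "
          f"{n:.1f} samples in a 0.2 m bed")
print(f"max speed for 0.05 m spacing: {max_speed_m_per_h(0.05, 4.0):.0f} m/h")
```

At 3600 m/h the bed in this example receives fewer than one sample, so it may not appear on the log at all, while at 900 m/h it is sampled about three times.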

Systematic depth errors: Cable stretch, depth-wheel slippage, and similar problems cause depth mismatches, so curves recorded on different runs or by different tools no longer line up. This is a “fatal flaw” for subsequent interpretation.
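Depth mismatches between runs are usually corrected by depth matching against a reference curve, often gamma ray. One simple approach, sketched here, slides one curve against the other and keeps the shift with the highest correlation. It assumes both curves share the same sample interval and that a single bulk shift is adequate; real depth matching often needs interval-by-interval (stretch/squeeze) shifts, and the function names and data are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation; returns None if either series is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return None
    return sxy / math.sqrt(sxx * syy)


def best_depth_shift(reference, other, max_lag, min_overlap=3):
    """Find the lag (in samples) that best aligns `other` with `reference`.

    A positive lag means other[i + lag] lines up with reference[i].
    Returns (lag, correlation at that lag).
    """
    best_lag, best_r = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        pairs = [(reference[i], other[i + lag])
                 for i in range(len(reference))
                 if 0 <= i + lag < len(other)]
        if len(pairs) < min_overlap:
            continue
        xs, ys = zip(*pairs)
        r = pearson(xs, ys)
        if r is not None and r > best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r


ref_gr = [20, 20, 25, 80, 30, 20, 20, 20, 20, 20]
run2_gr = [20, 20, 20, 20, 25, 80, 30, 20, 20, 20]  # same beds, 2 samples deeper
lag, r = best_depth_shift(ref_gr, run2_gr, max_lag=4)
print(lag, round(r, 3))
```

Multiplying the lag by the sample interval gives the depth correction to apply to the second run.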

Non-standard operation: Uneven running or pulling speed, or rough handling when the tool meets an obstruction or sticks, introduces spurious signals that do not originate from the formation.

4. Geological and engineering factors

Complex formation structures: In fractured formations, thinly interbedded sequences, and strongly anisotropic formations (such as shale), the detection and response models of conventional logging tools can become ineffective.

Drilling engineering impacts: Excessive well inclination, the presence of casing or a liner, poor cement quality, and similar conditions can restrict which logging services can be run or seriously interfere with the measured signals.

Time-lapse effect: If too much time passes between the end of drilling and logging, formation pressure recovers and fluids redistribute, so the logging data no longer represent the original formation state.

5. Data processing and interpretation factors

Insufficient environmental correction: If the effects of the borehole, mud, shoulder beds, and tool eccentricity are not thoroughly corrected, the raw data cannot truly reflect the characteristics of the formation.

Inconsistent data standardization: Data from multiple wells and different service companies carry systematic deviations caused by differences in instruments and calibration. Without standardization, regional studies lose their validity.

Incorrect interpretation model selection: Using the Archie equation, which applies to clean sandstone, to calculate water saturation in complex shaly (argillaceous) sandstone will inevitably produce incorrect results.
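For reference, the Archie equation gives water saturation as Sw = (a·Rw / (φ^m·Rt))^(1/n), where Rt is true formation resistivity, Rw formation water resistivity, φ porosity, and a, m, n empirical constants. The sketch below uses the common textbook defaults a = 1, m = 2, n = 2, which are assumptions rather than calibrated values. In shaly sands, clay conductivity lowers Rt, and because Archie attributes all conductivity to formation water, it overestimates Sw there; this is why shaly-sand models are used instead.

```python
def archie_sw(rt, rw, phi, a=1.0, m=2.0, n=2.0):
    """Water saturation from Archie: Sw = (a*Rw / (phi**m * Rt)) ** (1/n).

    Valid only for clean (shale-free) formations. The defaults for
    a, m, n are common textbook values, not calibrated constants.
    """
    if rt <= 0 or rw <= 0 or not 0 < phi <= 1:
        raise ValueError("resistivities must be positive and 0 < phi <= 1")
    sw = (a * rw / (phi ** m * rt)) ** (1.0 / n)
    return min(sw, 1.0)  # saturation cannot exceed 100 %


print(round(archie_sw(rt=20.0, rw=0.05, phi=0.20), 3))  # 0.25
```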

How to Improve The Quality of Logging Data

Given the influencing factors above, hardware and technology alone are not enough; the ability of personnel to anticipate, recognize, and handle complex situations is even more crucial. A systematic, consistent quality control system must therefore include an efficient path for developing these capabilities.

Conduct rehearsals and training using simulators

Using the Well Logging Simulator, teams can practice repeatedly under combinations of “virtual downhole environment + multiple formation models + multiple logging tool responses + multiple logging program options” in order to:

Optimize the logging program (including tool selection, parameter settings, logging speed, etc.)

Train logging engineers to become familiar with a variety of formations and well conditions and to prepare contingency plans for complex wells

Test the reliability of the logging program by simulating abnormal well conditions, extreme formations, and complex structures

Strengthen the maintenance and calibration of instruments and tools

Regularly inspect and calibrate logging instruments to keep their performance stable and reduce the impact of instrument faults on data quality. In addition, adopt newer logging tools where they improve measurement accuracy.

Optimize the operation process

Standardize the logging workflow so that every step is carried out strictly according to operating procedures, preventing human error from affecting the data.

Comprehensively utilize multiple logging technologies

Under complex formation conditions, combining several logging methods, such as electrical, acoustic, and nuclear magnetic resonance measurements, yields more comprehensive and accurate formation information.

Strengthen data processing and analysis

Apply modern processing techniques, such as filtering and denoising, to logging data to improve the accuracy of interpretation, and use advanced algorithms to support analysis and ensure that formation features are identified correctly.
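As one concrete example of such filtering, isolated spikes can be replaced using a running median, which suppresses outliers while preserving sharp bed boundaries better than a moving average. This is a minimal sketch; the function name, window length, and the threshold based on the median absolute deviation (MAD) are assumptions to be tuned per curve, and over-aggressive filtering can erase genuine thin beds.

```python
from statistics import median


def despike(values, window=5, threshold=3.0):
    """Replace isolated spikes with the local median.

    A sample counts as a spike when it differs from the median of its
    window by more than `threshold` times the window's median absolute
    deviation. If the MAD is zero, any departure from the median counts.
    Windows are always taken from the original (unfiltered) values.
    """
    if window % 2 == 0:
        raise ValueError("window must be odd")
    half = window // 2
    out = list(values)
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        win = values[lo:hi]
        med = median(win)
        mad = median([abs(v - med) for v in win])
        if abs(values[i] - med) > threshold * mad:
            out[i] = med
    return out


gr = [50, 51, 49, 500, 50, 52, 51]  # made-up gamma ray with one spike
print(despike(gr))  # [50, 51, 49, 51, 50, 52, 51]
```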

Optimize the wellbore and formation conditions

Optimize wellbore protection measures, for example by using a suitable drilling fluid system or other techniques, to reduce wellbore interference with logging data. In addition, formation stability should be considered when selecting the well location, to avoid data errors caused by formation heterogeneity.

The Consequences of Substandard Logging Data Quality

Resource assessment error

Incorrect logging data may lead to inaccurate estimation of oil and gas reserves, which in turn affects development planning and decision-making, causing economic losses.

Higher exploration and development costs

Inaccurate logging data may lead to poor well placement and development strategies, wasting resources and time and adding unnecessary exploration and development costs.

Lower development efficiency

Poor data quality can cause avoidable problems during oil and gas production, such as perforating the wrong intervals, and reduce production efficiency.

Compromised production safety

Incorrect downhole data may increase the safety risks of operations such as drilling and fracturing, and may even lead to accidents.

Frequently Asked Questions (FAQ)

Q: How can the impact of mud filtrate invasion on resistivity logs be identified quickly?

A: It can usually be identified by comparing resistivity curves with different depths of investigation (shallow, medium, and deep). If the deep resistivity reads significantly higher than the shallow resistivity, mud filtrate has invaded the formation, the true formation resistivity is likely higher still, and an invasion correction is required. In logging simulation training, different invasion profiles can be set up so that trainees repeatedly observe and compare the shapes of the curves and quickly build diagnostic experience.
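The curve comparison in this answer can be screened automatically by computing the ratio of deep to shallow resistivity at each depth. This minimal sketch flags separation in either direction (the answer above discusses the case where deep reads higher); the 1.5 ratio threshold and the function name are hypothetical, and a flag only marks an interval for review, it does not replace a proper invasion correction.

```python
def resistivity_separation(r_shallow, r_deep, ratio_limit=1.5):
    """Classify each depth by the deep/shallow resistivity ratio.

    `ratio_limit` is a hypothetical screening threshold, not a standard.
    Returns one label per depth.
    """
    flags = []
    for rs, rd in zip(r_shallow, r_deep):
        if rs <= 0 or rd <= 0:
            raise ValueError("resistivities must be positive")
        ratio = rd / rs
        if ratio > ratio_limit:
            flags.append("deep > shallow")
        elif ratio < 1.0 / ratio_limit:
            flags.append("shallow > deep")
        else:
            flags.append("no separation")
    return flags


# Made-up readings in ohm-m at three depths.
print(resistivity_separation([2.0, 5.0, 10.0], [2.2, 15.0, 4.0]))
# ['no separation', 'deep > shallow', 'shallow > deep']
```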

Q: Which curves are most affected by logging speed?

A: Logging speed has the greatest impact on measurements that require dense sampling or have a slow dynamic response, such as full-waveform acoustic, nuclear magnetic resonance (NMR), and formation micro-resistivity imaging (FMI). Excessive speed causes waveform distortion or image blurring, along with the loss of thin beds and fine features. Simulation software makes it easy to vary the logging speed and compare the curves for the same formation at different speeds, giving a clear understanding of why operating procedures impose speed limits.

Q: How can logging data from different service companies be compared and used?

A: The key is data standardization. Select a regionally stable marker bed (such as a thick mudstone or a tight limestone), statistically analyze each well's logging responses in that bed (for example gamma ray, neutron, and density), and normalize them to a common reference, which removes the systematic deviations caused by differences in instruments and calibration. In a simulated training environment, response deviations from different tool systems can be deliberately assigned to the same “formation”, so that trainees carry out the standardization procedure themselves and master its key technical points.
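The marker-bed normalization described in this answer can be sketched as a simple shift: compute each well's mean response inside the marker bed, then move the whole curve so that this mean matches a regional reference value. The function name, the shift-only approach, and the example numbers are assumptions for illustration; many workflows also adjust the scale, for example by matching histogram percentiles.

```python
from statistics import mean


def normalize_to_marker(depths, values, top, base, reference_mean):
    """Shift a curve so its mean in a marker bed matches a reference value.

    `top` and `base` bound the marker bed in the same units as `depths`.
    This is shift-only normalization; a gain term can be added when the
    spread of the response also differs between wells.
    Returns (normalized curve, applied shift).
    """
    in_marker = [v for d, v in zip(depths, values) if top <= d <= base]
    if not in_marker:
        raise ValueError("no samples inside the marker bed")
    shift = reference_mean - mean(in_marker)
    return [v + shift for v in values], shift


depths = [1500.0, 1501.0, 1502.0, 1503.0, 1504.0]
gr = [60.0, 120.0, 118.0, 122.0, 70.0]  # made-up gamma ray, API units
curve, shift = normalize_to_marker(depths, gr, 1501.0, 1503.0, reference_mean=110.0)
print(shift, curve)  # -10.0 [50.0, 110.0, 108.0, 112.0, 60.0]
```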

Conclusion

Logging data quality is the combined result of every stage of the process: instruments, well-site conditions, operations, geology, and processing. An oversight at any stage can let a small error grow into a large one. Modern logging quality control has shifted from after-the-fact remediation to prevention and in-process control, and is moving toward intelligent, practice-based training. By systematically managing the factors above and using advanced logging simulation tools to turn theoretical knowledge into practical skills that become second nature, teams can fundamentally improve their capabilities, keep these geological “eyes” sharp, and provide a solid, reliable data foundation for oil and gas exploration and development decisions.